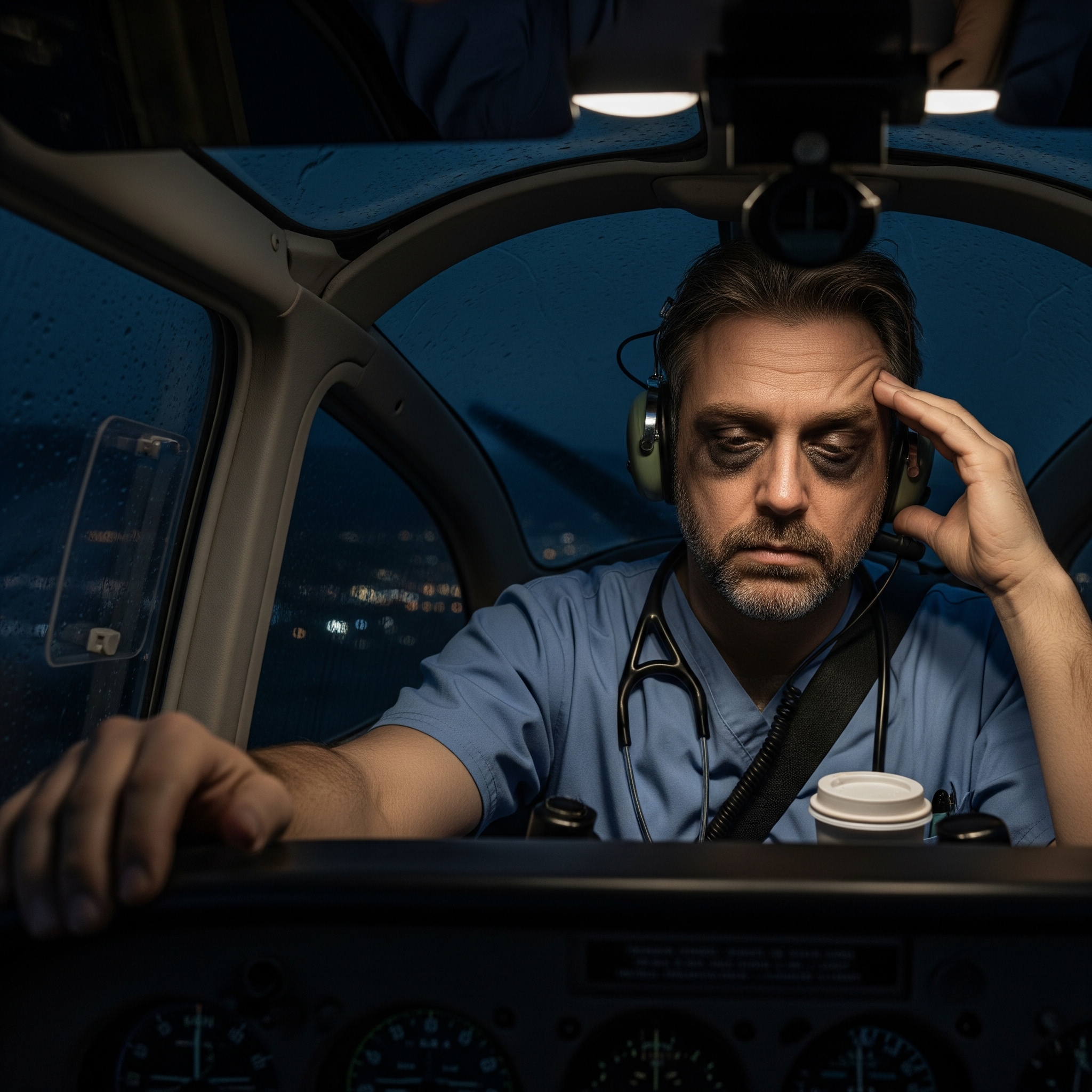

You wouldn’t fly with a tired pilot. Yet you’re perfectly fine with exhausted doctors making life-or-death decisions on three hours of sleep.

I’ve watched this absurd double standard play out countless times. A resident with shaking hands trying to insert an IV after 28 hours on call. That same week, airlines grounded pilots for working too many hours in a month.

We celebrate medical exhaustion as dedication. If a pilot bragged about pulling an all-nighter, we’d have them drug tested immediately.

In healthcare, working yourself into the ground becomes a badge of honor.

When Dedication Becomes Exploitation

The awakening hit me during residency. After complaining about exhaustion following a 36-hour shift, my attending said: “If you can’t handle this, maybe you’re not cut out for medicine.”

That’s when it clicked. This wasn’t about patient care.

We’ve created a twisted mythology where suffering equals caring. The more miserable you appear, the more “dedicated” you seem. But here’s the reality: I made more mistakes when exhausted, not fewer.

My clinical judgment was garbage after hour 20. Yet somehow I was supposed to feel proud about “pushing through.”

I watched a colleague literally fall asleep while suturing a patient. Instead of questioning the system that created this situation, the response was “he needs to build more stamina.”

We’ve convinced ourselves that being a zombie makes you a better doctor.

Hospitals know this is dangerous. They have data showing medical errors correlate with physician fatigue. But they perpetuate this “tough it out” culture because it’s cheaper than hiring adequate staff.

They’ve gotten really good at making us feel guilty for wanting basic human needs like sleep and food.

Enter the Algorithmic Overlords

Now we’re seeing AI systems make care decisions. The Humana lawsuit alleges that algorithms denied post-acute care, cutting off payment after only a fraction of the time patients were eligible for.

We’re going from one broken system to another broken system, calling it “progress.”

At least when I made mistakes from exhaustion, there was still a human brain processing patient complexity. Now we’re handing decisions to algorithms that can’t tell the difference between a heart attack and heartburn without the right keywords.

These AI systems learn from our already messed-up healthcare system. They’re replicating the same cost-cutting mentalities that created burnout in the first place.

It’s like teaching a robot to be as cynical as an insurance adjuster.

When I made a bad call while exhausted, I could be held accountable. I had a medical license, malpractice insurance, a conscience keeping me up at night.

When AI denies someone’s care and they suffer, who goes to jail? The programmer? The CEO? The algorithm itself?

Automating the Dysfunction

These AI systems get fed historical data that’s basically a masterclass in denying care efficiently. They learn from decades of insurance companies finding creative ways to say “no” to treatments.

The AI learns that if a patient has been in rehab for X days, most insurance companies deny extended care around day Y. So the algorithm automates that cutoff, dressing it up as “evidence-based medicine” instead of what it really is: cost management.

The systems also learn our diagnostic biases. Women and minorities get undertreated for pain, right? The AI learned from that historical data.

It’s now “objectively” recommending less aggressive pain management for those populations because that’s what the training data showed as “normal practice.”

These algorithms learn to game the system faster than humans ever could. They pick up on documentation patterns that correlate with denials. Doctor doesn’t use exactly the right buzzwords? Automatic denial.

We’ve created a robot that’s really good at being a bureaucratic nightmare.

When these systems make bad decisions, everyone shrugs and says “well, the computer said so.” We’re creating technological plausible deniability.

The Billion Dollar Burnout Business

This “heroic exhaustion” costs the U.S. healthcare system an estimated $4.6 billion annually. Each physician who leaves due to burnout costs their organization $500,000 to $1 million or more.

It’s like paying someone a million dollars to quit because you saved fifty thousand by not hiring adequate support staff.

Meanwhile, emergency medicine physicians report the highest burnout rate at 63%. These are the doctors most likely to see life-or-death cases.

We’re handing critical decisions to AI systems while the most crucial specialists burn out at unprecedented rates.

The people funding these AI systems are the same ones who created the burnout problem. Hospital administrators and insurance executives see doctors as expensive line items to optimize.

As long as healthcare is primarily a business, AI tools will serve business interests, not patient care or physician wellbeing.

What Healthcare Technology Should Actually Do

Instead of replacing clinical judgment, technology should amplify it and make our lives easier. I envision AI that reduces cognitive load rather than adding more hoops to jump through.

Picture an AI system that automatically handles administrative tasks. It writes notes while I talk to patients, handles prior authorizations in the background, and manages insurance paperwork without my involvement.

Suddenly I’m not staying late doing documentation. I can go home and sleep.

The AI should make recommendations, not decisions. It could flag potential drug interactions, remind me about screening guidelines I might miss due to fatigue, or suggest when I should take a break because my decision patterns show exhaustion markers.

Technology should protect patients FROM my exhaustion, not enable the system that creates it. Imagine an AI saying “Dr. Smith has worked 18 hours straight and his error rate is climbing. Maybe someone else should handle this complex case.”

Instead of replacing me, it’s watching my back.
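
Here’s a rough sketch of what that kind of advisory could look like in code. It is only an illustration, not a real product: the names `ShiftStatus` and `fatigue_advisory`, the error-rate metric, and the 1.5x threshold are all assumptions I made up for the example. The 18-hour figure comes from the scenario above. The key design point is that the function returns a suggestion for a human to act on, or nothing at all. It never makes the call itself.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds, chosen only for illustration.
MAX_HOURS_BEFORE_FLAG = 18     # from the "18 hours straight" example
ERROR_RATE_RISE_FACTOR = 1.5   # assumed: flag when errors run 50% above baseline


@dataclass
class ShiftStatus:
    physician: str
    hours_on_shift: float
    baseline_error_rate: float  # assumed metric, e.g. corrected orders per 100
    recent_error_rate: float    # same metric over the most recent hours


def fatigue_advisory(status: ShiftStatus) -> Optional[str]:
    """Return a recommendation for a human to review, or None. Never a decision."""
    long_shift = status.hours_on_shift >= MAX_HOURS_BEFORE_FLAG
    errors_climbing = (
        status.recent_error_rate
        >= status.baseline_error_rate * ERROR_RATE_RISE_FACTOR
    )
    if long_shift and errors_climbing:
        return (
            f"{status.physician} has worked {status.hours_on_shift:g} hours "
            "straight and the error rate is climbing. "
            "Consider handing off complex cases."
        )
    if long_shift:
        return (
            f"{status.physician} has worked {status.hours_on_shift:g} hours "
            "straight. Consider a break."
        )
    return None  # nothing to flag


if __name__ == "__main__":
    print(fatigue_advisory(ShiftStatus("Dr. Smith", 18, 2.0, 3.5)))
```

Whoever reads that message, whether a charge nurse, a colleague, or the doctor, still decides what to do with it.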

This requires design by people who understand practicing medicine, not just optimizing spreadsheets. The goal should be giving me more time with patients and more time to rest.

Breaking the Cycle

Real change requires a catastrophic failure so public and undeniable that it can’t be spun as an isolated incident. A major malpractice case where exhausted doctors and faulty AI both contribute to a preventable death, plastered across the news with families demanding answers.

Healthcare leaders only change when the financial cost of NOT changing exceeds the cost of doing right. They can settle malpractice suits quietly and write them off as business costs.

But when stock prices tank because patients fear their hospitals, that’s when you see real change.

We need doctors walking away en masse. Not threatening to quit, but actually leaving medicine or refusing to work in toxic environments. When emergency departments close because they can’t staff them, when surgeries get cancelled due to lack of rested surgeons, administrators will finally listen.

The brutal truth: they’ve got us trapped by our own compassion. It’s emotional blackmail on a systemic level. They know we became doctors because we care about people, so they use that against us.

“You can’t leave, patients need you!” Meanwhile, they create the conditions making us want to leave.

The Moral Obligation Paradox

Staying in a broken system and providing substandard care because I’m exhausted isn’t actually helping patients. When I make mistakes because I haven’t slept, when I rush through appointments because I’m overbooked, when I’m too burned out to really listen, am I fulfilling that moral obligation?

Or am I enabling a system that harms the very people I’m supposed to protect?

Patients deserve doctors who are alert, rested, and have enough time to think through their cases. By accepting these conditions, we’re saying “this level of care is acceptable” when it’s not.

The real moral obligation is refusing to participate in systems that compromise patient safety. If enough of us say “I won’t work 30-hour shifts because it’s dangerous for patients,” we’re not abandoning our duty.

We’re protecting it.

Sometimes the most ethical thing you can do is stop enabling an unethical system, even if it means short-term disruption.

Hope in the Next Generation

I’m cautiously optimistic. The younger generation of doctors isn’t buying into this martyrdom mythology. They’re looking at burned-out millennials and saying “hell no, I’m not doing that to myself.”

They’re demanding work-life balance from day one without shame.

Patients are getting smarter and more vocal too. They’re asking “how long have you been working today, doc?” and “is this AI making decisions about my care?” When patients demand transparency about doctor fatigue levels and AI involvement, that creates market pressure administrators can’t ignore.

Some health systems finally realize burned-out doctors are expensive. High turnover, malpractice suits, and recruitment costs all add up. Forward-thinking organizations invest in physician wellness programs and reasonable AI implementation because they’ve done the math.

It’s cheaper in the long run.

We’ll probably need that catastrophic wake-up call first. Something so bad it forces a complete reckoning with how we practice medicine. The question is whether we’ll learn from it or find new ways to rationalize dysfunction.

When that moment comes, we’ll have a whole generation of doctors already fed up and ready to build something better. They won’t accept “that’s how we’ve always done it” as an answer.

A Message to Healthcare Leaders

Stop pretending you don’t know what you’re doing. You have the data. You know how fatigue drives medical errors, turnover costs, and malpractice risk. But you keep making the same decisions because the quarterly numbers look good and someone else deals with the consequences.

We’re not your employees. We’re your partners in keeping people alive.

When you treat us like replaceable cogs, you compromise every patient walking through your doors. That exhausted resident with shaking hands? That’s your liability. That AI system denying care to save money? That’s your reputation on the line when it goes wrong.

The doctors you’re burning out today could help you build better systems tomorrow. Instead of partnering with us, you drive us away and wonder why you can’t recruit good physicians.

You create the very staffing shortages that force you to overwork remaining doctors.

The younger generation won’t tolerate this. They’ll leave medicine entirely before accepting a toxic culture. You can start treating physicians like human beings now, or explain to your board why your hospital can’t stay open because nobody wants to work there.

Stop hiding behind buzzwords like “efficiency” and “innovation” when you really mean “exploitation.”

We see right through it. Patients are starting to see through it. Eventually your investors will too.